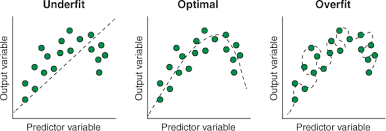

- Underfitting is the opposite of overfitting: the model has not learned the underlying pattern, so it performs poorly on both the training data and the test data. It is like a student who sits an exam with too little preparation.

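- To reduce underfitting, give the model more capacity to learn, for example a more expressive model, more informative features, or longer training. Adding more data alone usually does not help, because the model already fails to fit the data it has.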

- High bias & low variance

- High bias: the error on the training data is high.

- Low variance: the error on the test data is also high, but it stays close to the training error.
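The reading above can be turned into a rough check on the two error values. The sketch below is only an illustration: the function name `diagnose` and the thresholds `high_error` and `max_gap` are assumptions made for this example, not standard values, and sensible cut-offs depend on the task.

```python
def diagnose(train_error, test_error, high_error=0.2, max_gap=0.1):
    """Rough fit diagnosis from training and test error rates (0.0 to 1.0).

    high_error and max_gap are hypothetical thresholds chosen for illustration.
    """
    # High training error: the model has not even fit the data it saw.
    if train_error > high_error:
        return "underfitting"
    # Low training error but a large train/test gap: poor generalization.
    if test_error - train_error > max_gap:
        return "overfitting"
    return "reasonable fit"


print(diagnose(0.35, 0.38))  # high error on both sets
print(diagnose(0.02, 0.30))  # low training error, large gap
print(diagnose(0.05, 0.08))  # low error on both sets
```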

Methods to avoid Underfitting:

- Increase model complexity.

- Increase the number of features by performing feature engineering.

- Remove noise from the data.

- Increase the number of epochs or increase the duration of training to get better results.
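As a small illustration of the feature-engineering point, the sketch below fits a straight line to data that follows a quadratic curve, first on the raw input and then on a squared feature. The toy data and the helper names `fit_line` and `mse` are assumptions made for this example; it uses only the standard library and measures training error only.

```python
def fit_line(xs, ys):
    """Closed-form least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def mse(xs, ys, slope, intercept):
    """Mean squared error of the fitted line on (xs, ys)."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data (assumed for illustration): the target is quadratic in x.
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x * x + 1 for x in xs]

# Model on the raw feature x: a straight line cannot follow the curve.
slope, intercept = fit_line(xs, ys)
mse_raw = mse(xs, ys, slope, intercept)

# Model on the engineered feature x^2: the same linear fit is now enough.
squared = [x * x for x in xs]
slope_sq, intercept_sq = fit_line(squared, ys)
mse_engineered = mse(squared, ys, slope_sq, intercept_sq)

print(f"training MSE, raw feature:        {mse_raw:.2f}")         # 12.00
print(f"training MSE, engineered feature: {mse_engineered:.2f}")  # 0.00
```

The learning algorithm is identical in both runs; only the feature changes, which is why the training error drops.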

Overfitting:

- The clear sign of overfitting is that the model's accuracy is high on the training set but drops significantly on new data or on the test set. This means the model knows the training data very well but cannot generalize, which makes it useless in production.

- Low bias & high variance

- Low bias: the error on the training data is low.

- High variance: the error on the test data is high, well above the training error.
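An extreme case of this pattern is a model that simply memorizes its training set. The sketch below is a deliberately artificial illustration: the `Memorizer` class, the even/odd task, and the data ranges are all assumptions made for this example.

```python
from collections import Counter


def is_even(x):
    """Ground-truth label for the toy task: 1 if x is even, else 0."""
    return 1 if x % 2 == 0 else 0


class Memorizer:
    """Hypothetical model that stores every training example verbatim."""

    def fit(self, xs, ys):
        self.table = dict(zip(xs, ys))
        # Fallback for unseen inputs: the most common training label.
        self.default = Counter(ys).most_common(1)[0][0]
        return self

    def predict(self, x):
        return self.table.get(x, self.default)


def accuracy(model, xs, ys):
    """Fraction of inputs for which the prediction matches the label."""
    return sum(model.predict(x) == y for x, y in zip(xs, ys)) / len(xs)


train_x = list(range(0, 10))
test_x = list(range(10, 20))  # inputs the model has never seen
train_y = [is_even(x) for x in train_x]
test_y = [is_even(x) for x in test_x]

model = Memorizer().fit(train_x, train_y)
train_acc = accuracy(model, train_x, train_y)
test_acc = accuracy(model, test_x, test_y)

print(f"train accuracy: {train_acc:.2f}")  # 1.00
print(f"test accuracy:  {test_acc:.2f}")   # 0.50
```

The model scores perfectly on the data it has seen and no better than chance on new inputs, because it stored the examples instead of learning the rule behind them.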

Methods to avoid Overfitting:

- Cross-Validation

- Early Stopping

- Pruning

- Regularization
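Two of these methods, cross-validation and early stopping, can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a library implementation: the helper names `k_fold_indices` and `early_stopping_epoch`, the `patience` parameter, and the validation losses are invented for the example.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train_idx, val_idx
        start += size


def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training stops.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Cross-validation: every sample is used for validation exactly once.
folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print("validate on", val_idx)

# Early stopping: made-up validation losses that start rising after epoch 2.
losses = [0.9, 0.7, 0.6, 0.65, 0.7, 0.5]
print("best epoch:", early_stopping_epoch(losses, patience=2))  # 2
```

In the early-stopping call, training halts after epoch 4, so the later loss of 0.5 is never reached. That is the trade-off `patience` controls: a larger value tolerates temporary rises at the cost of more training time.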